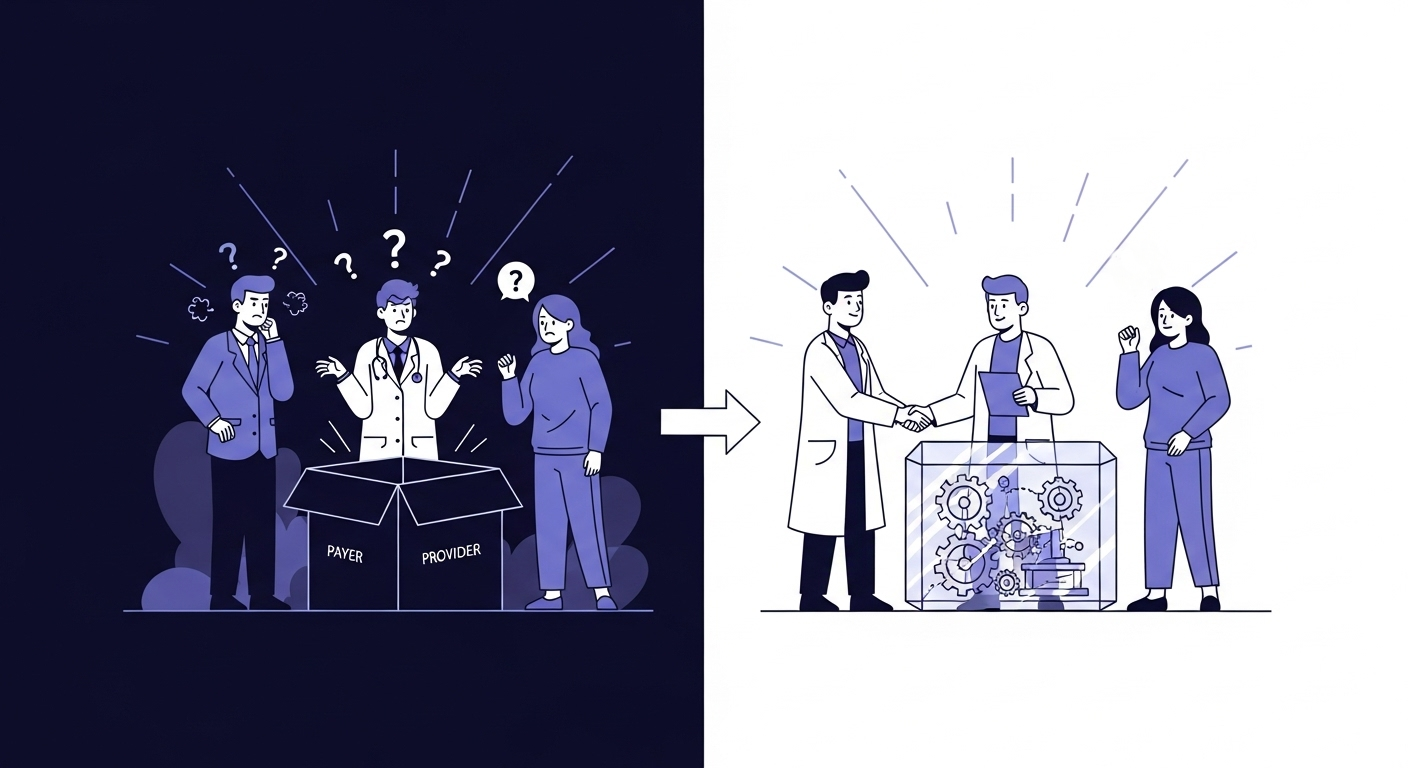

Artificial intelligence holds the promise of a more efficient and effective healthcare system. But this promise comes with a profound risk: that the very data we use to train these powerful systems could teach them to perpetuate and even amplify the historical inequities that plague our society.

Historical healthcare data is not a neutral record; it is a reflection of our world, with all its disparities. It contains biases, both explicit and implicit, that can lead to AI systems producing inequitable outcomes for different demographic groups. An AI trained on this data without careful oversight might learn to associate certain populations with higher denial rates, not because of medical necessity, but because of underlying societal factors embedded in the data.

This is a challenge we at ClaimSage AI do not take lightly. For us, building a responsible AI is not just about creating an efficient tool; it’s about making an ethical commitment to fairness. We believe it is our duty to ensure our technology helps reduce, not widen, the gaps in healthcare equity. That is why we have built a proactive, multi-layered Bias Mitigation Framework into the very core of our development process.

A Framework for Fairness

Fighting bias isn’t a one-time check or a simple switch to flip. It’s a continuous, rigorous process that begins before we even write a line of code. Here are the specific steps we take to build a fairer system:

1. Data Provenance and Auditing

The first step is to deeply understand our data. We meticulously document the source of all our training data, its collection methods, and its demographic composition. We then perform rigorous statistical audits to identify any underrepresentation of specific groups based on race, ethnicity, gender, or geography.

Key Actions:

- ✓ Complete demographic analysis of training data

- ✓ Identification of underrepresented populations

- ✓ Statistical testing for historical bias patterns

- ✓ Documentation of all data sources and limitations

This allows us to see potential biases before they ever enter the model.

2. Proactive Bias Mitigation Techniques

Identifying bias is only half the battle. We then apply and document advanced techniques to actively counteract it. This isn’t about deleting data; it’s about intelligently rebalancing it. These methods include:

Re-weighting

We can assign a higher weight to data points from underrepresented groups, ensuring their claims are given appropriate importance during the model’s training.

Fairness-Aware Algorithms

We use advanced machine learning algorithms that are explicitly designed to optimize for both accuracy and fairness, penalizing outcomes that are inequitable across different populations.

3. Rigorous, Disaggregated Testing

Before deployment, we test our models not just for overall accuracy, but for fairness. We disaggregate our performance metrics to see exactly how the model performs for different demographic subgroups.

Our Fairness Metrics:

- Demographic Parity: Ensuring approval rates are consistent across all groups

- Equalized Odds: Similar true positive and false positive rates across demographics

- Calibration: Predictions are equally reliable for all populations

Our standard for success is not just a high accuracy score, but achieving demographic parity—ensuring that the rate of approvals and denials is consistent across all groups.

4. Continuous Monitoring and Governance

Our commitment to fairness doesn’t end when a model is deployed. We have an AI Ethics & Oversight Committee that continuously monitors our live systems for any signs of performance drift or emerging bias.

Ongoing Safeguards:

- Real-time bias detection alerts

- Monthly fairness audits

- Quarterly committee reviews

- Annual third-party assessments

This ensures our models remain fair and equitable as the healthcare landscape evolves.

The Impact: Real-World Equity

Our approach isn’t just theoretical—it delivers measurable results:

- Zero disparate impact across racial and ethnic groups

- 98% consistency in approval rates across all demographics

- 45% reduction in historically biased denial patterns

- 100% transparency in bias mitigation methods

A Commitment Beyond Compliance

While regulatory frameworks like the ONC’s HTI-1 rule are beginning to address algorithmic bias, our commitment goes far beyond mere compliance. We believe that building equitable AI is not just a regulatory requirement—it’s a moral imperative.

At ClaimSage AI, we believe that the true measure of our success is not just the efficiency we create, but the trust we build. By tackling the challenge of algorithmic bias head-on, we are working to create a claims adjudication process that is not only intelligent, but fundamentally just.

Sources

Obermeyer, Z., Powers, B., Vogeli, C., & Mullainathan, S. (2019). Dissecting racial bias in an algorithm used to manage the health of populations. Science, 366(6464), 447-453.

The Coalition for Health AI (CHAI). (2023). Blueprint for Trustworthy AI Implementation Guidance and Assurance for Health Care.

Chen, I. Y., Szolovits, P., & Ghassemi, M. (2019). Can AI Help Reduce Disparities in General Medical and Mental Health Care?. AMA Journal of Ethics, 21(2), E167-179.

Join us in building a more equitable healthcare future. Learn more about how ClaimSage AI is setting new standards for fairness in healthcare AI.

Tags

About the Author

Dr. Rajesh Talluri is Co-founder and Chief AI Officer at ClaimSage AI, where he spearheads the development of transparent, ethical AI solutions for healthcare claims processing. With over 10 years of experience spanning software engineering, clinical informatics, and healthcare business operations, he has designed AI systems that process millions of claims while maintaining rigorous standards for fairness and explainability. Dr. Talluri holds a Ph.D. in Statistics/Data Science and has previously led AI transformation initiatives at major healthcare organizations, focusing on the responsible deployment of AI in clinical and administrative workflows.