The rise of artificial intelligence has sparked a familiar narrative: a future where machines replace human workers. In the world of healthcare claims adjudication, this vision is not only inaccurate—it’s dangerous. While AI is a powerful tool for finding patterns in data, it lacks the nuanced understanding, contextual awareness, and ethical judgment that define human expertise.

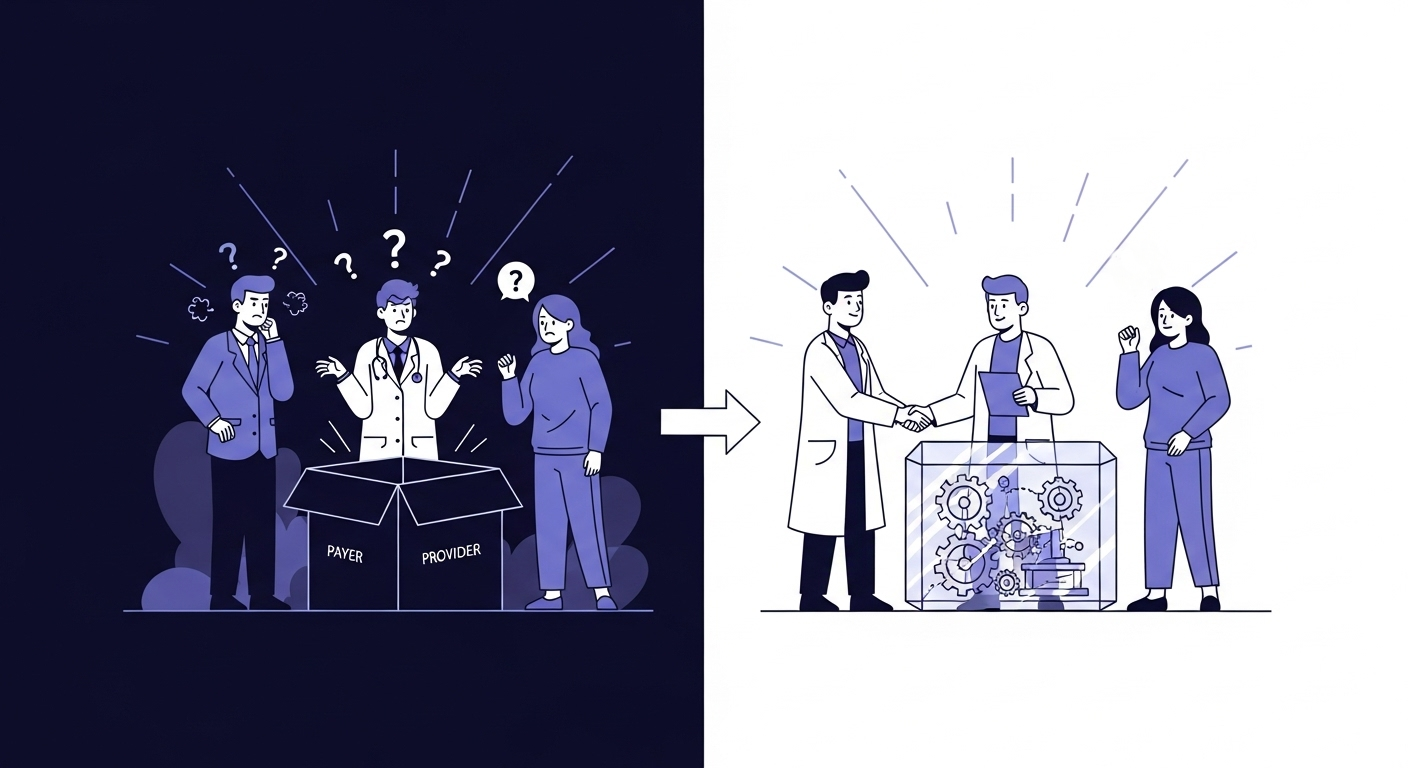

At ClaimSage AI, our philosophy is clear: AI should augment human intelligence, not replace it. The goal is not to create a fully autonomous system that makes critical decisions in a vacuum. The goal is to build a powerful “co-pilot” that empowers our human experts, freeing them from tedious tasks so they can apply their invaluable experience where it matters most.

This core belief is engineered into every aspect of our platform through a robust Human-in-the-Loop (HITL) system. It’s a system designed for collaboration, safety, and accountability.

Designing for a Human-AI Partnership

Our HITL system isn’t just a simple review queue. It’s a sophisticated interface designed to create a seamless partnership between the adjudicator and the AI. Here’s how it works:

1. Intelligent Triage

Not all claims are created equal. Our system automatically triages claims, routing simple, low-risk cases for efficient processing while immediately flagging complex, high-cost, or ambiguous claims for mandatory review by a senior human adjudicator.

Triage Categories:

- Green Lane: Simple, clear-cut claims with high confidence scores

- Yellow Lane: Claims requiring standard review

- Red Lane: Complex cases requiring senior expertise

- Critical Lane: High-risk or unusual claims demanding immediate attention

This ensures that expert eyes are always focused on the cases that require the most scrutiny.
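As a rough illustration of how lane routing like this could work, here is a minimal sketch. The `Claim` fields, the threshold values, and the `triage` function are all hypothetical names and numbers chosen for this example; they do not describe ClaimSage AI's actual rules.

```python
from dataclasses import dataclass
from enum import Enum


class Lane(Enum):
    GREEN = "Green Lane"
    YELLOW = "Yellow Lane"
    RED = "Red Lane"
    CRITICAL = "Critical Lane"


@dataclass
class Claim:
    claim_id: str
    amount: float             # billed amount in dollars
    confidence: float         # model confidence, 0.0 to 1.0
    is_unusual: bool = False  # assumed to be set by a separate anomaly check


# Hypothetical thresholds, for illustration only.
HIGH_COST = 10_000.0
HIGH_CONFIDENCE = 0.95
LOW_CONFIDENCE = 0.70


def triage(claim: Claim) -> Lane:
    """Route a claim to a lane, checking the riskiest conditions first."""
    if claim.is_unusual:
        return Lane.CRITICAL
    if claim.amount >= HIGH_COST or claim.confidence < LOW_CONFIDENCE:
        return Lane.RED
    if claim.confidence >= HIGH_CONFIDENCE:
        return Lane.GREEN
    return Lane.YELLOW
```

Checking the riskiest conditions first means a high-cost claim never lands in the Green Lane, however confident the model is.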

2. An Explainable Interface

We believe that trust requires understanding. Our interface never just presents a recommendation; it shows its work. The human adjudicator sees:

The Recommendation

e.g., “Flag for Denial.”

The Confidence Score

The AI’s confidence in its own recommendation (0-100%).

The “Why”

The key factors and specific policy rules that led to the recommendation, explained in plain language.

Example Display:

Recommendation: Flag for Review

Confidence: 87%

Reasoning:

- Procedure code 99214, when billed by time, corresponds to 30-39 minutes of total practitioner time on the date of the encounter

- Documentation shows only 15 minutes recorded

- Policy ABC-123 requires time documentation for E/M codes billed by time

- Similar claims from this provider show pattern of time discrepancies

This turns the AI from a black box into a glass box, allowing the adjudicator to quickly validate its reasoning.
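One way to picture the data behind a display like this is a small record that refuses to exist without its reasoning. The sketch below is an assumption made for illustration: the `Recommendation` class and its `render` method are hypothetical and are not the platform's real interface.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    action: str
    confidence: float  # 0.0 to 1.0
    reasons: list[str] = field(default_factory=list)

    def __post_init__(self) -> None:
        # A recommendation without its "why" is rejected outright.
        if not self.reasons:
            raise ValueError("a recommendation must include at least one reason")
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0.0 and 1.0")

    def render(self) -> str:
        """Format the recommendation the way an adjudicator would read it."""
        lines = [
            f"Recommendation: {self.action}",
            f"Confidence: {self.confidence:.0%}",
            "Reasoning:",
        ]
        lines.extend(f"- {reason}" for reason in self.reasons)
        return "\n".join(lines)


rec = Recommendation(
    action="Flag for Review",
    confidence=0.87,
    reasons=[
        "Documentation shows only 15 minutes recorded",
        "Similar claims from this provider show pattern of time discrepancies",
    ],
)
print(rec.render())
```

Making the reasons mandatory at construction time is one simple way to guarantee that a bare recommendation can never reach the screen.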

3. The Unambiguous Override

The human expert always has the final say. Our system features a clear, simple mechanism for the adjudicator to override the AI’s suggestion.

Critically, every override is logged and categorized:

- “AI factual error”

- “Clinical judgment override”

- “Policy exception applied”

- “Additional context considered”

This creates an invaluable feedback loop that we use to continuously monitor and improve the AI’s performance, making it a smarter partner over time.
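A categorized override log of this kind can be sketched in a few lines. The four categories come from the list above; everything else (`OverrideRecord`, `OverrideLog`, the field names, the in-memory list) is a hypothetical simplification, since a production system would presumably write to durable, audited storage.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timezone
from enum import Enum


class OverrideReason(Enum):
    AI_FACTUAL_ERROR = "AI factual error"
    CLINICAL_JUDGMENT = "Clinical judgment override"
    POLICY_EXCEPTION = "Policy exception applied"
    ADDITIONAL_CONTEXT = "Additional context considered"


@dataclass(frozen=True)
class OverrideRecord:
    claim_id: str
    adjudicator_id: str
    ai_recommendation: str
    final_decision: str
    reason: OverrideReason
    logged_at: datetime


class OverrideLog:
    """In-memory stand-in for an audit log of adjudicator overrides."""

    def __init__(self) -> None:
        self._records: list[OverrideRecord] = []

    def record(self, claim_id: str, adjudicator_id: str,
               ai_recommendation: str, final_decision: str,
               reason: OverrideReason) -> OverrideRecord:
        # Timestamp each override in UTC so entries order consistently.
        entry = OverrideRecord(claim_id, adjudicator_id, ai_recommendation,
                               final_decision, reason,
                               datetime.now(timezone.utc))
        self._records.append(entry)
        return entry

    def counts_by_reason(self) -> Counter:
        """Tally overrides per category, e.g. to spot recurring AI errors."""
        return Counter(r.reason for r in self._records)
```

Tallies such as `counts_by_reason()` are the kind of signal that could feed model monitoring: a rising share of "AI factual error" overrides would point to something worth investigating.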

The Value of Human Judgment

There are countless scenarios where human expertise is irreplaceable:

Clinical Nuance

A claim for an unusual treatment combination might look suspicious to an AI, but an experienced clinician recognizes it as an innovative approach for a rare condition.

Compassionate Exceptions

Sometimes strict policy application isn’t the right answer. Human adjudicators can recognize when compassionate exceptions are warranted.

Evolving Medical Practice

Medical practice can change faster than AI models are retrained. Human experts stay current with the latest treatments and protocols, and they can apply that knowledge before it appears in any training data.

Ethical Considerations

Complex ethical decisions about resource allocation and fairness require human wisdom and accountability.

The Results: Better Outcomes for Everyone

Our Human-in-the-Loop approach delivers measurable benefits:

- 98% adjudicator satisfaction with AI recommendations

- 45% reduction in time spent on routine claims

- 3x more time available for complex case review

- Zero critical errors, thanks to human oversight

The Future is Collaborative

The future of claims adjudication isn’t a choice between human and machine. It’s a partnership between the two. By keeping a qualified human expert in control, we ensure that the efficiency of AI is always guided by the wisdom, accountability, and ethical judgment of people.

At ClaimSage AI, we’re not building technology to replace healthcare professionals—we’re building it to empower them. Because at the end of the day, healthcare is about humans caring for humans, and no algorithm can replace that.

Sources

Holm, S. (2023). Accountability in human-expert-in-the-loop AI. AI & SOCIETY.

The Coalition for Health AI (CHAI). (2024). Responsible AI Guide (RAIG).

Shneiderman, B. (2020). Human-Centered AI: Reliable, Safe & Trustworthy. International Journal of Human–Computer Interaction, 36(6), 495-504.

Experience the power of human-AI collaboration. Schedule a demo to see how ClaimSage AI empowers your team while keeping human expertise at the center.

About the Author

Dr. Rajesh Talluri is Co-founder and Chief AI Officer at ClaimSage AI, where he spearheads the development of transparent, ethical AI solutions for healthcare claims processing. With over 10 years of experience spanning software engineering, clinical informatics, and healthcare business operations, he has designed AI systems that process millions of claims while maintaining rigorous standards for fairness and explainability. Dr. Talluri holds a Ph.D. in Statistics/Data Science and has previously led AI transformation initiatives at major healthcare organizations, focusing on the responsible deployment of AI in clinical and administrative workflows.